Sudo -u spark vi $SPARK_HOME/conf/hive-site.xml Spark configuration files Hive configuration Update the system environment file by adding SPARK_HOME and adding Spark_HOME/bin to the PATHĮxport SPARK_HOME=/usr/apache/spark-1.6.0-bin-hadoop2.6Ĭhange the owner of $SPARK_HOME to spark sudo chown -R spark:spark $SPARK_HOME Log and pid directories Step into the spark 1.6.0 directory and run pwd to get full path Sudo chown -R spark:spark spark-1.6.0-bin-hadoop2.6 Remove the tar file after it has been unpackedĬhange the ownership of the folder and its elements Sudo tar -xvzf spark-1.6.0-bin-hadoop2.6.tgz I have Hadoop 2.7.1, version 2.6 does the trick. Create the directory and step into it.ĭownload Spark 1.6.0 from. I am following their lead so I am installing all Apache services under /usr/apache. Hortonworks installs its services under /usr/hdp.

#Install apache spark on centos 6 install

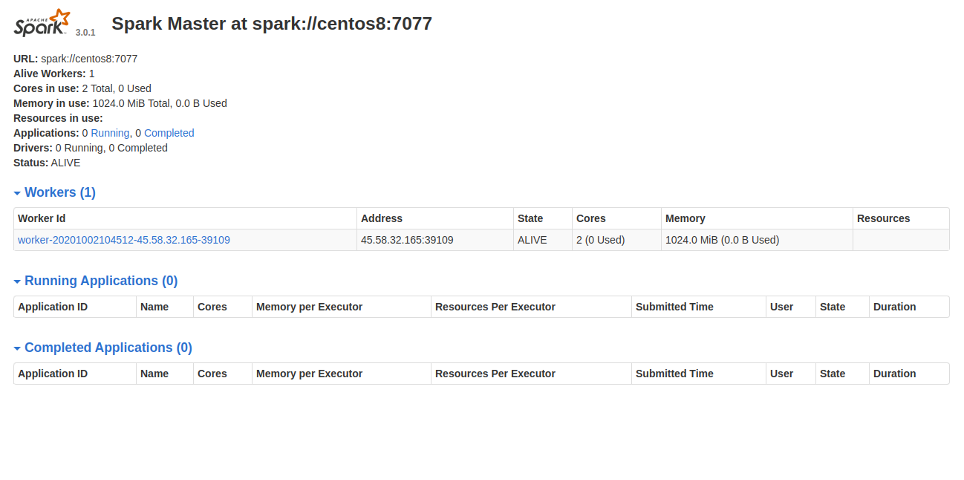

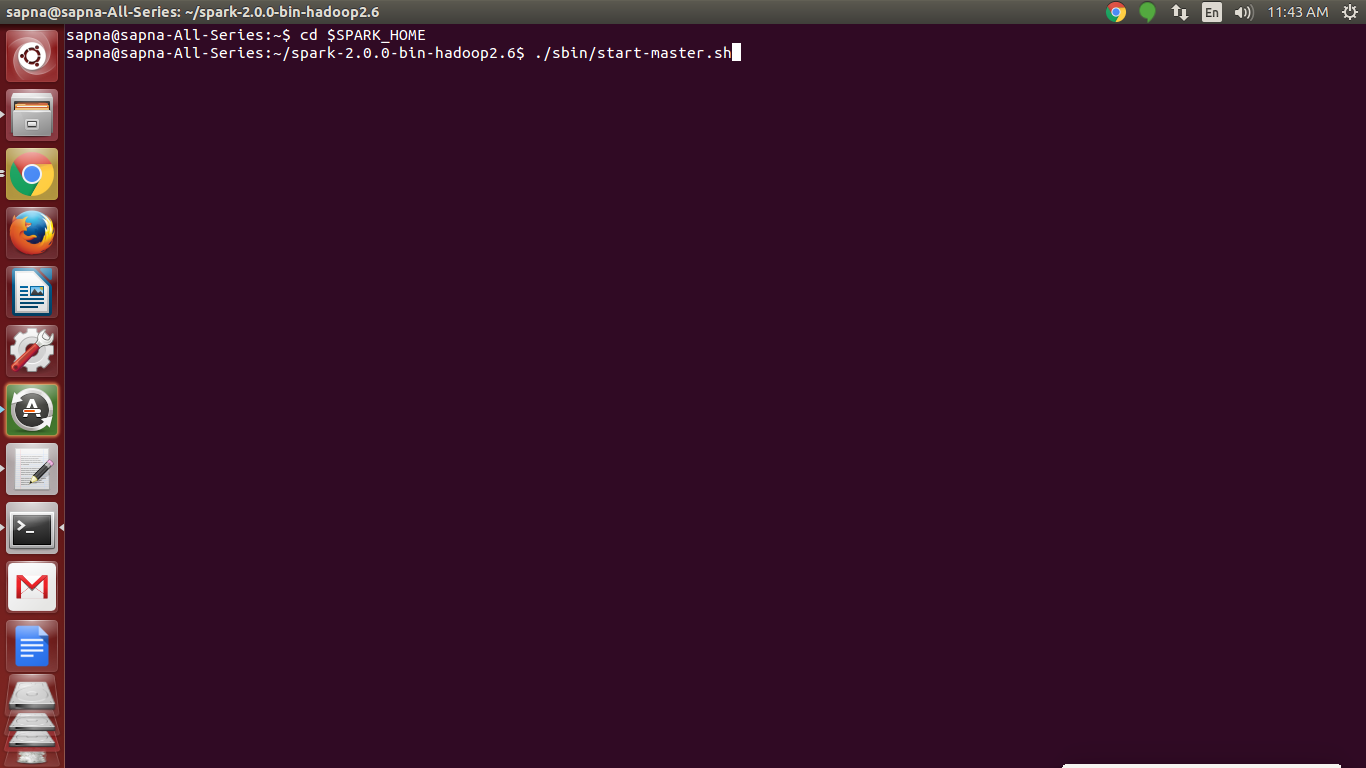

Spark installation and configuration Install SparkĬreate directory where spark directory is going to reside. Sudo -u hdfs hadoop fs -chown -R spark:hdfs /user/spark Sudo -u hdfs hadoop fs -mkdir -p /user/spark Sudo add-apt-repository ppa:openjdk-r/ppaĪdd JAVA_HOME in the system variables fileĮxport JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64Ĭreate user spark and add it to group hadoop Update and upgrade the system and install Java My post on setting up Apache Spark 2.0.0. The documentation on Spark version 1.6 is here. This is not the case for Apache Spark 1.6, because Hortonworks does not offer Spark 1.6 on HDP 2.3.4 My cluster has HDFS and YARN, among other services. The Spark in this post is installed on my client node. I am running a HDP 2.3.4 multinode cluster with Ubuntu Trusty 14.04 on all my nodes.
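The unpack, ownership and HDFS steps from this post can be collected into one script. What follows is only a minimal sketch, not the exact procedure I ran. It assumes the tarball has already been downloaded into /usr/apache. The `DRY_RUN` switch and the `run`, `install_spark` and `print_env_lines` helpers are hypothetical names introduced for illustration, and the `PATH` line is my guess at how SPARK_HOME/bin would be appended. By default the script only prints the commands, so it can be inspected safely before anything is executed.

```shell
#!/bin/sh
# Dry-run sketch of the Spark 1.6.0 install steps described in the post.
# By default every command is only printed; set DRY_RUN=0 to execute them.
set -eu

INSTALL_ROOT="/usr/apache"                 # install location used in the post
SPARK_PKG="spark-1.6.0-bin-hadoop2.6"      # package name used in the post
SPARK_HOME="${INSTALL_ROOT}/${SPARK_PKG}"
DRY_RUN="${DRY_RUN:-1}"                    # hypothetical switch, not part of Spark

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

install_spark() {
    # Assumption: the tarball already sits in $INSTALL_ROOT.
    run sudo mkdir -p "$INSTALL_ROOT"
    run sudo tar -xzf "${INSTALL_ROOT}/${SPARK_PKG}.tgz" -C "$INSTALL_ROOT"
    run sudo rm "${INSTALL_ROOT}/${SPARK_PKG}.tgz"
    run sudo chown -R spark:spark "$SPARK_HOME"
    # Home directory in HDFS for the spark user.
    run sudo -u hdfs hadoop fs -mkdir -p /user/spark
    run sudo -u hdfs hadoop fs -chown -R spark:hdfs /user/spark
}

# Lines to add to the system environment file.
print_env_lines() {
    echo "export SPARK_HOME=${SPARK_HOME}"
    # Single quotes keep $PATH and $SPARK_HOME literal in the printed line.
    echo 'export PATH=$PATH:$SPARK_HOME/bin'
}

install_spark
print_env_lines
```

Running it as is prints each command with a `+` prefix, followed by the two export lines. Running it with `DRY_RUN=0` executes the commands, which requires sudo rights and a working `hadoop` client on the node.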

